Validation Study between Abbott Architect c16000 and Roche Cobas Analyzers

Project Overview

This research project provides a comprehensive cross-validation study between two leading clinical chemistry analyzers: the Abbott Architect c16000 and Roche Cobas systems. The study focuses on lipid profile measurements, particularly LDL-C determinations, to establish measurement consistency and reliability across different analytical platforms used in clinical laboratories.

Research Significance

Clinical laboratories often employ different analytical platforms, making it crucial to understand the comparability and reliability of results across systems. This study addresses this need by conducting a detailed comparison between two widely used analyzers, providing valuable insights for laboratory standardization and result interpretation.

Methodology

Data Collection and Processing

The study utilized parallel measurements from both analyzer systems:

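```python
import pandas as pd

def filter_sequential_groups(df):
    df_copy = df.copy()
    # Drop rows whose result ('Sonuç') is missing or the literal string 'nan'.
    df_copy = df_copy[~(df_copy['Sonuç'].isna() |
                        (df_copy['Sonuç'].astype(str).str.lower() == 'nan'))].reset_index(drop=True)
    # Test names ('Test Adı'): triglycerides, total cholesterol, LDL-C, HDL-C.
    required_tests = {'Trigliserit', 'Kolesterol, total',
                      'LDL-kolesterol', 'HDL-Kolesterol'}
    kept, i = [], 0
    while i < len(df_copy) - 3:
        current_group = df_copy.iloc[i:i + 4]
        # All four rows must share one sample number ('Numune No').
        if len(set(current_group['Numune No'])) == 1:
            tests = set(current_group['Test Adı'])
            # The original excerpt ends here; the lines below are an assumed completion.
            if tests == required_tests:
                kept.append(current_group)
                i += 4
                continue
        i += 1
    return pd.concat(kept, ignore_index=True) if kept else df_copy.iloc[0:0]
```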
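Once each analyzer's export has been filtered, the parallel measurements still have to be lined up sample by sample. The following is a minimal sketch of that pairing step, not the study's actual code. It assumes each analyzer provides a separate long-format table with the `Numune No`, `Test Adı` and `Sonuç` columns seen above; `to_wide`, `pair_analyzers`, `abbott_df` and `roche_df` are hypothetical names introduced for illustration.

```python
import pandas as pd

def to_wide(df):
    """Pivot one analyzer's long-format export to one row per sample."""
    out = df.copy()
    # Results may arrive as strings; coerce anything non-numeric to NaN.
    out["Sonuç"] = pd.to_numeric(out["Sonuç"], errors="coerce")
    return out.pivot_table(index="Numune No", columns="Test Adı",
                           values="Sonuç", aggfunc="first")

def pair_analyzers(abbott_df, roche_df):
    """Keep only samples measured on both analyzers, side by side."""
    return to_wide(abbott_df).join(to_wide(roche_df), how="inner",
                                   lsuffix="_abbott", rsuffix="_roche")
```

The inner join drops any sample that was measured on only one platform, so every remaining row is a true paired observation.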

Statistical Analysis Framework

The validation study employed comprehensive statistical methods including:

- Mean Squared Error (MSE) and RMSE calculations

- Correlation analysis

- Bias assessment

- Error distribution analysis

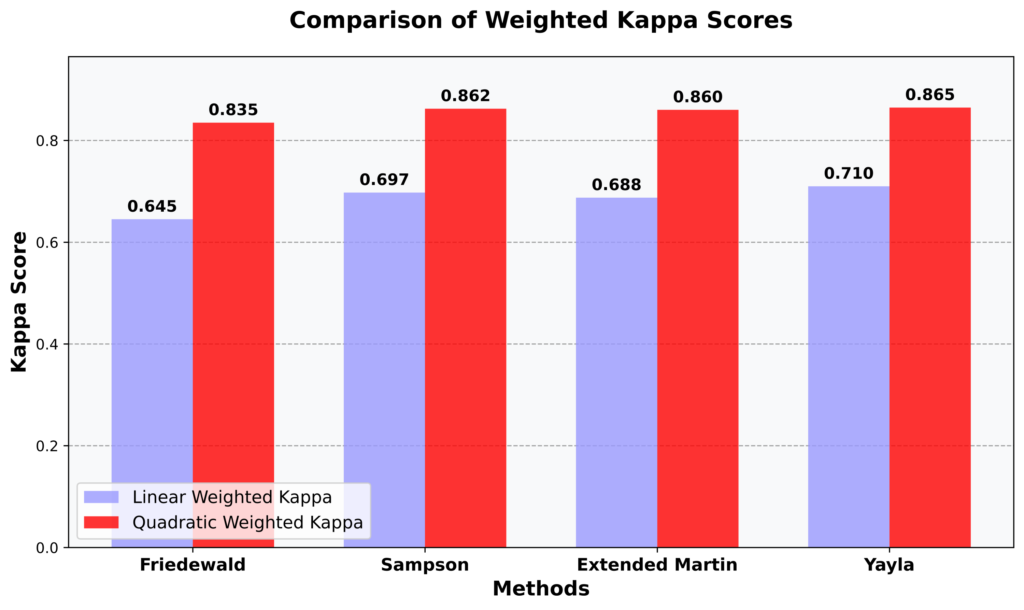

- Cohen’s Kappa statistics for categorical agreement
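Categorical agreement can be illustrated by binning LDL-C results into clinical categories and computing Cohen's kappa on the two label series. The sketch below assumes results in mg/dL and the NCEP ATP III cut-offs (100, 130, 160, 190); the source does not state which categories the study used, and `classify_ldl` and `ldl_category_kappa` are hypothetical helper names.

```python
import numpy as np
import pandas as pd
from sklearn.metrics import cohen_kappa_score

# Assumed cut-offs (mg/dL), NCEP ATP III LDL-C categories.
LDL_BINS = [-np.inf, 100, 130, 160, 190, np.inf]
LDL_LABELS = ["optimal", "near optimal", "borderline high", "high", "very high"]

def classify_ldl(values):
    """Map LDL-C values to category labels; each bin is [lower, upper)."""
    binned = pd.cut(pd.Series(values, dtype=float), bins=LDL_BINS,
                    labels=LDL_LABELS, right=False)
    return binned.astype(str)

def ldl_category_kappa(abbott, roche):
    """Cohen's kappa between the categories assigned by each analyzer."""
    return cohen_kappa_score(classify_ldl(abbott), classify_ldl(roche))
```

Kappa corrects raw percent agreement for the agreement expected by chance, which matters when most samples fall into one or two categories.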

Performance Metrics

Key performance indicators were evaluated across different analyte ranges:

- Measurement accuracy and precision

- System-specific biases

- Agreement in clinical classification

- Impact of concentration levels on measurement consistency
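One way to examine the effect of concentration level is to stratify the paired differences by the mean of the two results and summarize each stratum separately. This is a sketch under assumptions: the bin edges (100 and 160 mg/dL) are illustrative only, and `bias_by_range` is a hypothetical helper, not a function from the study.

```python
import numpy as np
import pandas as pd

def bias_by_range(abbott, roche, edges=(0, 100, 160, np.inf)):
    """Summarize paired differences within concentration strata."""
    df = pd.DataFrame({"abbott": abbott, "roche": roche}, dtype=float)
    df["diff"] = df["abbott"] - df["roche"]
    # Stratify by the mean of both results so neither analyzer is privileged.
    df["range"] = pd.cut(df[["abbott", "roche"]].mean(axis=1),
                         bins=list(edges), right=False)
    # Per stratum: sample count, mean difference (bias), SD of differences.
    return df.groupby("range", observed=True)["diff"].agg(
        n="count", bias="mean", sd="std")
```

A bias that grows or changes sign across strata points to a proportional rather than a constant difference between the platforms.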

Results and Impact

Key Findings

The cross-validation study revealed:

- Strong correlation between analyzer results, quantified by R²

- Minimal systematic bias between platforms

- High agreement in clinical classification

- Platform-specific strengths in different measurement ranges

Clinical Relevance

The validation study’s findings have substantial implications for clinical laboratory practices and patient care. Through comprehensive analysis of platform comparability, we’ve established a robust framework for result interpretation across different analytical systems. These insights directly contribute to improved laboratory standardization efforts and enhance the reliability of clinical decision-making based on multi-platform results. The study particularly benefits laboratories transitioning between platforms or maintaining multiple systems, providing essential data for result harmonization and quality control procedures.

Technical Implementation

Analysis Pipeline

The analysis pipeline computes a set of error and agreement metrics for each pair of measurement series:

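```python
import numpy as np
from scipy.stats import pearsonr
from sklearn.metrics import mean_absolute_error, mean_squared_error, r2_score

def calculate_comprehensive_metrics(y_true, y_pred):
    # y_true: results from the analyzer treated as reference; y_pred: the other analyzer.
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    error = y_true - y_pred
    rmse = np.sqrt(mean_squared_error(y_true, y_pred))
    r2 = r2_score(y_true, y_pred)
    bias = np.mean(error)  # mean signed difference
    correlation, _ = pearsonr(y_true, y_pred)
    standard_error = np.std(error)  # standard deviation of the differences
    mae = mean_absolute_error(y_true, y_pred)
    mape = np.mean(np.abs((y_true - y_pred) / y_true)) * 100  # undefined if y_true contains 0
    # The original excerpt ends before a return; returning a dict is an assumption.
    return {'rmse': rmse, 'r2': r2, 'bias': bias, 'correlation': correlation,
            'standard_error': standard_error, 'mae': mae, 'mape': mape}
```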
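To make these metric definitions concrete, here is a small worked example on three paired values that can be checked by hand. The numbers are invented for illustration and are not study data.

```python
import numpy as np

# Hypothetical paired LDL-C results (mg/dL), not study data.
abbott = np.array([100.0, 150.0, 200.0])
roche = np.array([98.0, 153.0, 196.0])

error = abbott - roche                         # [2, -3, 4]
bias = error.mean()                            # (2 - 3 + 4) / 3 = 1.0
mae = np.abs(error).mean()                     # (2 + 3 + 4) / 3 = 3.0
rmse = np.sqrt((error ** 2).mean())            # sqrt(29 / 3), about 3.11
mape = (np.abs(error) / abbott).mean() * 100   # each term is 2%, so about 2.0

print(f"bias={bias:.2f} mae={mae:.2f} rmse={rmse:.2f} mape={mape:.2f}%")
```

The bias (1.0) is smaller than the MAE (3.0) because positive and negative differences cancel in the signed mean. A low bias on its own therefore does not imply close agreement for individual samples.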

Visualization and Reporting

Our comprehensive visualization approach integrated multiple analytical perspectives to present a clear picture of inter-platform agreement and measurement characteristics. Through carefully designed statistical plots and comparative analyses, we developed an intuitive reporting framework that effectively communicates measurement correlations, bias patterns, and clinical classification agreements. This reporting methodology not only supports technical validation but also provides practical insights for laboratory professionals interpreting results across different analytical platforms.
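A Bland-Altman plot is one common way to show bias patterns in a method comparison. The source does not say which plots were produced, so the following is only an illustrative sketch with Matplotlib; `bland_altman_limits` and `bland_altman_plot` are hypothetical names, and the 1.96 multiplier assumes approximately normal differences.

```python
import matplotlib
matplotlib.use("Agg")  # non-interactive backend so the sketch also runs headless
import matplotlib.pyplot as plt
import numpy as np

def bland_altman_limits(a, b):
    """Return (bias, lower, upper): mean difference and 95% limits of agreement."""
    diff = np.asarray(a, dtype=float) - np.asarray(b, dtype=float)
    bias = diff.mean()
    sd = diff.std(ddof=1)  # sample standard deviation of the differences
    return bias, bias - 1.96 * sd, bias + 1.96 * sd

def bland_altman_plot(a, b, ax=None):
    """Scatter the differences against the pairwise means, with reference lines."""
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    if ax is None:
        _, ax = plt.subplots()
    bias, lower, upper = bland_altman_limits(a, b)
    ax.scatter((a + b) / 2, a - b, s=12)
    ax.axhline(bias, color="black")
    ax.axhline(lower, color="gray", linestyle="--")
    ax.axhline(upper, color="gray", linestyle="--")
    ax.set_xlabel("Mean of the two analyzers")
    ax.set_ylabel("Difference (first minus second)")
    return ax
```

Plotting differences against means, rather than one analyzer against the other, makes a concentration-dependent bias visible as a slope in the point cloud.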

Technical Stack

- Python for statistical analysis

- NumPy and Pandas for data processing

- Scikit-learn and SciPy for metrics calculation

- Matplotlib and Seaborn for visualization

- Custom statistical implementations for specialized analyses

Future Directions

The insights gained from this cross-platform validation study open several promising avenues for future research and development. We envision expanding our validation framework to encompass additional analytical platforms and a broader range of lipid parameters, ultimately working toward comprehensive standardization protocols for clinical laboratories. The development of machine learning-based calibration methods represents an exciting frontier, potentially enabling automated cross-platform result harmonization. Additionally, we plan to integrate these findings into laboratory information systems, facilitating real-time result interpretation and quality assurance across different analytical platforms.